Developing a Modern Search Stack: Optimizing Elasticsearch’s Boost Values

This article is part of a series from Fullscript about how we built our modern search stack. You can find the other articles here.

In autumn 2023, the search stack at Fullscript was very simple: just an Elasticsearch query. The query matched against multiple fields (e.g. product name, description, ingredients and brand), each with its own boost value. This is roughly what the query looked like:

```json
{
  "query": {
    "bool": {
      "should": [
        { "match": { "name": { "query": "<query>", "boost": 2.5 } } },
        { "match": { "name.keyword": { "query": "<query>", "boost": 4 } } },
        { "match": { "description": { "query": "<query>", "boost": 2 } } },
        { "match": { "ingredients": { "query": "<query>", "boost": 1.5 } } },
        { "match": { "brand": { "query": "<query>", "boost": 1 } } },
        { "match": { "brand.keyword": { "query": "<query>", "boost": 3 } } }
      ]
    }
  }
}
```

At that point, search had not been developed in a particularly sophisticated or data-driven way. The entire user query was matched against every field, which led to false-positive matches. The boost values had been chosen by hand: we ran many queries against our index and checked that the results looked sensible. For some common user queries the results were reasonable, but for others many irrelevant products were returned. To provide more relevant results, we started using Quepid and Optuna.

Quepid

Quepid, which is developed by OpenSource Connections, is an open-source service that provides a framework and UI for evaluating the quality of search results. By integrating Quepid with your Elasticsearch index, you can query your index from Quepid and see the returned documents. A subject-matter expert can then build a judgment list by labelling the quality of the search results on a scale of your choice (we chose 0 to 10). These scores are aggregated using a metric, such as NDCG (Normalized Discounted Cumulative Gain), to measure the quality of your Elasticsearch query for a given search text. Below, we see the labels for the search query “b6” (as in “vitamin b6”). The results are reasonably good, with an NDCG value of 0.84.

The NDCG values for each search query can be averaged to produce an overall score for an Elasticsearch query. Below, we see that the Elasticsearch query had an average NDCG value of 0.77 across the 63 user queries in our judgment list.

To compare the performance of two Elasticsearch queries, you can view their results side by side.

For each search query, our subject-matter experts scored 30 to 100 documents. Although the number of queries was modest, it was a representative sample: we included a balanced mix of query types, such as ingredient-only, ingredient with a typo, health condition, brand and supplement type, and product name. This meant that if NDCG increased on this dataset, we could expect a similar improvement for most user queries.

Notice that we’re using NDCG@24 as our evaluation metric. NDCG is a standard search evaluation metric, and 24 was chosen because users see 24 products on the first page of results on our website. This also saved time for our experts, as they didn’t need to score every result, only those that appeared in the top 24 of any result set.
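For reference, one common definition of NDCG@k is shown below, with k = 24 here. rel_i is the expert rating of the result at rank i (0 for unrated documents), IDCG@k is the DCG@k of the judged ratings sorted from best to worst, and Q is the set of judged queries. The article doesn't say which gain function was used; this sketch assumes linear gain, whereas some tools use 2^{rel_i} - 1 instead.

```latex
\mathrm{DCG@}k = \sum_{i=1}^{k} \frac{\mathrm{rel}_i}{\log_2(i + 1)}, \qquad
\mathrm{NDCG@}k = \frac{\mathrm{DCG@}k}{\mathrm{IDCG@}k}, \qquad
\overline{\mathrm{NDCG@}24} = \frac{1}{|Q|} \sum_{q \in Q} \mathrm{NDCG@}24(q)
```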

Now that we have a representative judgment list, we can use this data to optimize our Elasticsearch query’s boost values to improve the relevancy of our results! To help do this, we used the Python package Optuna.

Optuna

Optuna is a hyperparameter optimization framework. If you provide it with:

- A set of parameters and ranges (min & max Elasticsearch boost values)

- A function (multiply each field’s BM25 score by its boost value, then sum together to produce a relevancy score per search result)

- An evaluation metric (NDCG@24)

- A dataset (containing the BM25 scores and judgment list)

Then it will efficiently sample values for your parameters using Bayesian optimization to maximize the evaluation metric for your function. You can then use the search results from your optimized query to begin a feedback loop:

- Update your Elasticsearch query

- Label new results in Quepid to grow your judgment list

- Optimize parameters with Optuna
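The function and dataset described above can be evaluated entirely offline. The following is a minimal sketch, assuming you have already collected each field's BM25 score for every (query, document) pair together with the expert rating. The row format, `FIELDS`, `ndcg_at_k` and `mean_ndcg` are hypothetical names chosen for illustration, and linear gain is assumed for NDCG. In a `bool` query the scores of matching `should` clauses are added together, and each clause's score is multiplied by its boost, so a weighted sum of per-field scores approximates the query shown earlier. One limitation: this approach can only re-rank the documents whose scores were captured, so it misses any document that would enter the top 24 under new boosts but wasn't captured.

```python
import math
from collections import defaultdict

# Hypothetical field names, one per boosted clause in the Elasticsearch query.
FIELDS = ["name", "name_keyword", "description", "ingredients", "brand", "brand_keyword"]


def ndcg_at_k(ranked_ratings, all_ratings, k=24):
    """NDCG@k with linear gain: rating / log2(rank + 1), ranks starting at 1."""
    def dcg(ratings):
        return sum(r / math.log2(i + 2) for i, r in enumerate(ratings[:k]))

    ideal = dcg(sorted(all_ratings, reverse=True))
    return dcg(ranked_ratings) / ideal if ideal > 0 else 0.0


def mean_ndcg(boosts, rows, k=24):
    """Re-rank each query's documents by sum(boost * BM25) and average NDCG@k.

    Assumed row format: {"query": str, "rating": int, "bm25": {field: score}},
    where unrated documents have rating 0 and unmatched fields are omitted.
    """
    by_query = defaultdict(list)
    for row in rows:
        by_query[row["query"]].append(row)

    scores = []
    for docs in by_query.values():
        # Simulated relevancy score: weighted sum of the per-field BM25 scores.
        ranked = sorted(
            docs,
            key=lambda d: sum(boosts[f] * d["bm25"].get(f, 0.0) for f in FIELDS),
            reverse=True,
        )
        ranked_ratings = [d["rating"] for d in ranked]
        all_ratings = [d["rating"] for d in docs]
        scores.append(ndcg_at_k(ranked_ratings, all_ratings, k))
    return sum(scores) / len(scores) if scores else 0.0
```

In an Optuna objective, you could build `boosts` with one `trial.suggest_float(field, 0.0, 10.0)` call per field and return `mean_ndcg(boosts, rows)`. Because no Elasticsearch request is made per trial, each trial is much cheaper than re-querying the index.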

Here’s an example of what your Optuna code could look like:

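```python
import numpy as np
import optuna
import requests

# Assumed to be defined elsewhere (not shown in this article):
#   queries             - the user queries in the judgment list
#   df                  - a pandas DataFrame of judgments with "query", "docid" and "rating" columns
#   elasticsearch_query - builds the Elasticsearch query body from the search text and boosts
#   ndcg_score          - a custom helper computing NDCG from ranked and ideal ratings
#                         (not sklearn.metrics.ndcg_score, which expects 2-D arrays)

TOP_K = 24  # users see 24 products on the first page of results


def run_optuna(n_trials=50):
    def objective(trial: optuna.Trial) -> float:
        # Sample a boost value in [0, 10] for each field
        name = trial.suggest_float("name", 0.0, 10.0)
        name_keyword = trial.suggest_float("name_keyword", 0.0, 10.0)
        description = trial.suggest_float("description", 0.0, 10.0)
        ingredients = trial.suggest_float("ingredients", 0.0, 10.0)
        brand = trial.suggest_float("brand", 0.0, 10.0)
        brand_keyword = trial.suggest_float("brand_keyword", 0.0, 10.0)

        ndcg_values = []

        for query in queries:
            es_query = elasticsearch_query(
                query,
                name,
                name_keyword,
                description,
                ingredients,
                brand,
                brand_keyword,
            )
            # Elasticsearch returns only 10 hits by default, so request the top 24 explicitly
            es_query["size"] = TOP_K

            response = requests.post(
                "https://elastic:url/catalog/_search",
                json=es_query,
                timeout=30,
            )
            response.raise_for_status()

            hits = response.json().get("hits", {}).get("hits", [])[:TOP_K]
            ranked_doc_ids = [int(h["_id"]) for h in hits]

            # Expert ratings for this query, keyed by document ID
            query_df = df[df["query"] == query]
            query_ratings = query_df.set_index("docid")["rating"].to_dict()

            # Score 0 if the document has no rating
            ranked_ratings = [query_ratings.get(doc_id, 0) for doc_id in ranked_doc_ids]
            ideal_ratings = sorted(query_df["rating"].tolist(), reverse=True)[:TOP_K]
            ndcg_values.append(ndcg_score(ranked_ratings, ideal_ratings))

        # Optuna maximizes the mean NDCG@24 across all judged queries
        return float(np.mean(ndcg_values))

    study = optuna.create_study(direction="maximize")
    study.optimize(objective, n_trials=n_trials)

    print("Best score:", study.best_value)
    print("Best params:", study.best_trial.params)
    return study
```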
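The Optuna code above calls an `elasticsearch_query` helper that isn't shown. Here is a minimal sketch of what it might look like, assuming it simply plugs the sampled boosts into the `bool`/`should` query from the start of this article. The optional `size` parameter is our own addition, since Elasticsearch returns 10 hits by default.

```python
def elasticsearch_query(query, name, name_keyword, description,
                        ingredients, brand, brand_keyword, size=24):
    """Build a bool/should query with one boosted match clause per field."""
    boosts = {
        "name": name,
        "name.keyword": name_keyword,
        "description": description,
        "ingredients": ingredients,
        "brand": brand,
        "brand.keyword": brand_keyword,
    }
    return {
        "size": size,  # number of hits to return (Elasticsearch defaults to 10)
        "query": {
            "bool": {
                # Scores of matching should clauses are summed; each is scaled by its boost
                "should": [
                    {"match": {field: {"query": query, "boost": boost}}}
                    for field, boost in boosts.items()
                ]
            }
        },
    }
```

For example, `elasticsearch_query("b6", 2.5, 4, 2, 1.5, 1, 3)` reproduces the manually tuned query from the start of this article, with `"size": 24` added.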

After iterating on our query multiple times, we achieved a significant improvement in our NDCG@24. If you have a large Elasticsearch index, indexing only a subset of your data can speed up your feedback loop. As you near the end of the iteration cycle, test against your entire index to confirm that your results still hold.
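One way to choose such a subset, sketched under our own assumptions rather than taken from Fullscript's setup, is to keep every document that appears in your judgment list, so each judged result stays retrievable, and add a reproducible random sample of the remaining documents. `sample_index_subset` is a hypothetical helper. Keep in mind that BM25's IDF statistics are computed from the indexed documents, so scores on a subset differ from those on the full index. This is another reason to re-validate against the entire index.

```python
import random


def sample_index_subset(all_doc_ids, judged_doc_ids, n_extra, seed=0):
    """Return every judged document ID plus a reproducible sample of the others."""
    judged = set(judged_doc_ids)
    # Sort so the sample depends only on the seed, not on set iteration order
    unjudged = sorted(set(all_doc_ids) - judged)
    rng = random.Random(seed)  # fixed seed: every iteration indexes the same subset
    extra = rng.sample(unjudged, min(n_extra, len(unjudged)))
    return judged | set(extra)
```

Fixing the seed keeps the subset stable between iterations, so NDCG changes reflect changes to the query rather than to the indexed documents.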

To help you iterate on your Elasticsearch analyzers, we recommend this website (https://elasticsearchanalyzerlab.xyz/), which shows in real time how changes to your analyzers affect tokenization.

Share this post

Related Posts

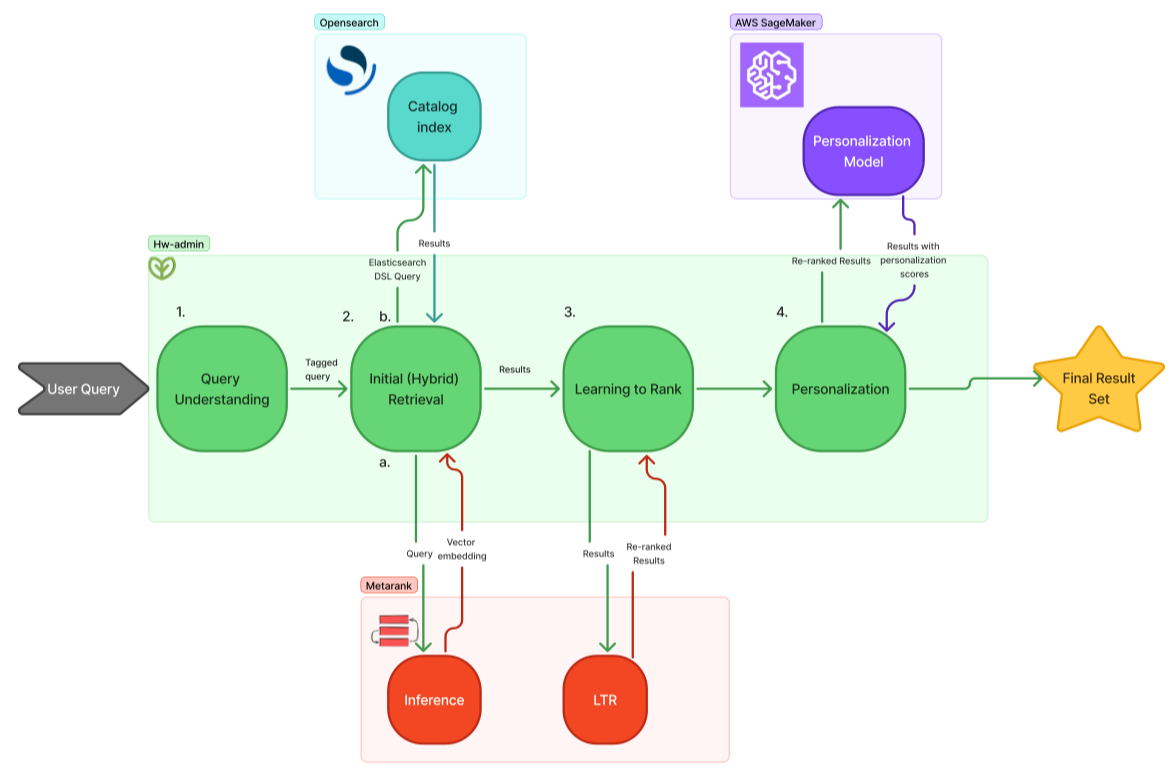

Developing a Modern Search Stack: An Overview

An overview of how Fullscript built a modern search stack to provide more relevant results to its users.

So We Built Our Own Agentic Developer…and then the CEO shipped a feature

Lessons learned from building Nitro, Fullscript's autonomous background agent.