Developing a Modern Search Stack: Learning-to-Rank with Metarank

This article is part of a series from Fullscript about how we built our modern search stack. You can find the other articles here.

In part 2 of this blog series, we described how we optimized the boost values for our Elasticsearch query using Quepid and Optuna. Although this made our search results much more relevant, it was clear that we still had a long way to go. One issue with relying only on an Elasticsearch query to generate search results is that a document’s relevancy score is always the sum of its BM25 scores multiplied by their boost values, which is essentially a linear model. This logic is too simplistic: a term in a user’s query can be more or less important depending on the other terms in the query. For example, consider a search engine for a grocery store and the following search terms:

- “Cheese”

- “Cheese Pizza”

- “Vegan Cheese Pizza”

In these examples, “cheese” becomes less important as other terms are added to the search query. Using just an Elasticsearch query to generate these search results could lead to irrelevant products being returned and ranked highly. To help us overcome challenges like this, we developed a learning-to-rank (LTR) service using Metarank, an open-source ranking service.
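
To make the limitation concrete, here is a minimal Python sketch of a purely linear score. The field names, boost values, and BM25 scores are made up for illustration and are not Fullscript's actual configuration; the point is only that a match in a given field contributes the same fixed amount no matter what else the query matched.

```python
# Hypothetical boost values per Elasticsearch field (illustrative only).
BOOSTS = {"name": 3.0, "description": 1.0}


def linear_score(bm25_by_field: dict[str, float]) -> float:
    """Sum each field's BM25 score multiplied by that field's boost."""
    return sum(BOOSTS[field] * score for field, score in bm25_by_field.items())


# A name-field match with BM25 = 2.0 always adds 3.0 * 2.0 to the score,
# whether or not any other field matched as well.
name_only = linear_score({"name": 2.0})
name_and_description = linear_score({"name": 2.0, "description": 1.5})

print(name_only, name_and_description)
```

Because the score is a weighted sum, no choice of boosts lets the importance of one match depend on another. That kind of interaction is what a learned ranking model can capture.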

What is Learning to Rank?

LTR is a family of machine learning techniques for improving the order of search results or recommendations. Instead of manually designing scoring functions (such as optimizing the boost values for an Elasticsearch query), you learn them from the data.

At a high level, an LTR service works by:

- Collecting training data where you know (or can infer) which results are better than others (e.g. using product views, add-to-carts, purchases, etc.)

- Extracting features for each item (text-based similarity scores, prices, popularity, etc.)

- Training a machine learning model to rank results given a query
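
The three steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the rows, the two features (a BM25 score and a popularity value), and the labels are invented, and a tiny pairwise linear ranker stands in for the gradient-boosted models a production LTR service would normally train.

```python
import math

# Step 1 and 2: each row is (query_id, features, label).
# Features are [bm25, popularity]; the label is a graded relevance
# inferred from interactions. All values are made up.
TRAINING_ROWS = [
    ("q1", [2.0, 0.1], 0),
    ("q1", [1.5, 0.9], 2),
    ("q1", [0.5, 0.4], 1),
    ("q2", [3.0, 0.2], 0),
    ("q2", [2.5, 0.8], 1),
]


def preference_pairs(rows):
    """Yield (better_features, worse_features) pairs within each query."""
    by_query = {}
    for query_id, features, label in rows:
        by_query.setdefault(query_id, []).append((features, label))
    for docs in by_query.values():
        for feats_a, label_a in docs:
            for feats_b, label_b in docs:
                if label_a > label_b:
                    yield feats_a, feats_b


def train_pairwise(rows, epochs=500, learning_rate=0.1):
    """Step 3: fit linear weights with a logistic pairwise loss."""
    weights = [0.0] * len(rows[0][1])
    pairs = list(preference_pairs(rows))
    for _ in range(epochs):
        for better, worse in pairs:
            diff = [b - w for b, w in zip(better, worse)]
            margin = sum(wt * d for wt, d in zip(weights, diff))
            # Gradient step on log(1 + exp(-margin)).
            scale = 1.0 / (1.0 + math.exp(margin))
            weights = [wt + learning_rate * scale * d for wt, d in zip(weights, diff)]
    return weights


def rerank(weights, candidates):
    """Sort candidate feature vectors by model score, best first."""
    def score(features):
        return sum(w * x for w, x in zip(weights, features))
    return sorted(candidates, key=score, reverse=True)


weights = train_pairwise(TRAINING_ROWS)
print(rerank(weights, [[2.0, 0.1], [1.5, 0.9], [0.5, 0.4]]))
```

In this toy data the items with the highest BM25 score are not the ones users preferred, so the model learns to lean on popularity. That is the sense in which behaviour, rather than term matching alone, shapes the final order.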

By adding an LTR service to our search stack, our results were no longer just based on matching terms, but also on users' behaviour. Their actions taught our reranking model which Elasticsearch fields were more important when multiple fields matched a query. Using this more sophisticated logic, our search metrics further improved, as did the feedback from our users.

How Metarank Helped Us Move Faster

To build an LTR service, you need to have collected quite a bit of data:

- Product/document data: name, price, description, ingredients, etc.

- Relevancy data: BM25 scores from Elasticsearch

- User interaction data: clicks, add-to-carts, purchases, etc.

We had this data ready, but we did not have the infrastructure in place to leverage it in an LTR service. This is where Metarank stepped in.

Metarank provides tooling and infrastructure to build and deploy an LTR service. Specifically, it helped us:

- Store and aggregate search data (user queries and clicks) into training data and a judgment list for the machine learning model

- Create features for the machine learning model, e.g. popularity-based features

- Train and serve our machine learning ranking models (based on XGBoost)

Optimizing Our LTR Service

As with any other machine learning service, there’s much that can be tuned and optimized. Metarank allows you to export your training and testing data after it has been processed. This allows you to perform feature selection and tune your models’ hyperparameters to achieve better performance. We used Optuna to facilitate the optimization process.

Metarank automatically generates your judgment list based on the weights you assign to interaction events. It’s important to align these weights with the events’ frequencies and your business metrics. For example, an e-commerce website likely has many more product views than purchases, so the weight for purchases should be set high enough to offset this difference. You could keep things simple by using only one interaction event for your judgment list (e.g. purchases), but you might lose signal from other events (e.g. product views) that could improve the performance of your search engine.
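
As a rough illustration of aligning weights with event frequencies, the Python sketch below weights each event type by how rare it is and then sums the weights per query and product. The event log, the inverse-frequency rule, and the function names are assumptions made for this example; it does not reproduce how Metarank builds its judgment list internally.

```python
from collections import Counter, defaultdict

# Hypothetical interaction log: (query, product_id, event_type).
EVENTS = [
    ("vegan cheese pizza", "p1", "view"),
    ("vegan cheese pizza", "p1", "view"),
    ("vegan cheese pizza", "p1", "purchase"),
    ("vegan cheese pizza", "p2", "view"),
    ("vegan cheese pizza", "p2", "view"),
    ("vegan cheese pizza", "p2", "view"),
    ("vegan cheese pizza", "p3", "add_to_cart"),
]


def inverse_frequency_weights(events):
    """Weight each event type by its rarity relative to the most common type."""
    counts = Counter(event_type for _, _, event_type in events)
    most_common = max(counts.values())
    return {event_type: most_common / n for event_type, n in counts.items()}


def judgment_list(events, weights):
    """Sum event weights per (query, product) to get a graded relevance label."""
    labels = defaultdict(float)
    for query, product_id, event_type in events:
        labels[(query, product_id)] += weights[event_type]
    return dict(labels)


weights = inverse_frequency_weights(EVENTS)
labels = judgment_list(EVENTS, weights)
print(weights)
print(labels)
```

Inverse frequency is only a starting point. If purchases matter more to the business than add-to-carts, their weight can be scaled up further before the labels are computed.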

At Fullscript, our search engine serves multiple types of users. At a high level, they are healthcare practitioners and their patients. These users live in many countries, mainly Canada and the US. We found that having a separate LTR model for each combination of user type and country best served our users, given their different search behaviours and product availability. This meant that we had to run our model optimization process for each model, but since it’s a process that can be automated, the reward-to-effort ratio is quite high.
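
One simple way to organize segment-specific models is a lookup keyed by user type and country. The sketch below is hypothetical: the model names, the segment keys, and the fallback to a shared default are assumptions for illustration, not a description of Fullscript's setup or of Metarank's configuration.

```python
# Hypothetical model names keyed by (user_type, country).
MODELS_BY_SEGMENT = {
    ("practitioner", "US"): "ltr-practitioner-us",
    ("practitioner", "CA"): "ltr-practitioner-ca",
    ("patient", "US"): "ltr-patient-us",
    ("patient", "CA"): "ltr-patient-ca",
}

# Assumed fallback for segments without a dedicated model.
DEFAULT_MODEL = "ltr-default"


def model_for(user_type: str, country: str) -> str:
    """Pick the segment-specific model, falling back to a shared default."""
    return MODELS_BY_SEGMENT.get((user_type, country), DEFAULT_MODEL)


def segments_to_optimize() -> list[tuple[str, str]]:
    """List every segment so the same tuning job can run once per model."""
    return sorted(MODELS_BY_SEGMENT)


print(model_for("patient", "CA"))
print(segments_to_optimize())
```

Keeping the segments in one place makes it straightforward to loop the automated optimization process over every model.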

Initial Retrieval + LTR

Adding a ranking service after our initial retrieval service (i.e. our Elasticsearch query) allowed us to specialize each service. In our previous article about optimizing Elasticsearch’s boost values, we optimized against NDCG@24. This decision was suitable given we only had one service, and NDCG is a valuable search metric. However, now that we have an initial retrieval and a reranking service, we can optimize our Elasticsearch query against recall and optimize the reranking service against NDCG. This focuses the initial retrieval service on getting relevant products and the reranking service on putting the most relevant products at the top of the results.
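
The split between the two metrics can be seen in a small Python sketch. The product IDs and relevance grades are made up, and the NDCG here uses the linear-gain form of DCG (some implementations use an exponential gain instead).

```python
import math


def recall_at_k(ranked_ids, relevant_ids, k):
    """Fraction of all relevant items that appear in the top k."""
    if not relevant_ids:
        return 0.0
    hits = sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant_ids)
    return hits / len(relevant_ids)


def dcg_at_k(gains, k):
    """Discounted cumulative gain with linear gain and a log2 position discount."""
    return sum(gain / math.log2(position + 2) for position, gain in enumerate(gains[:k]))


def ndcg_at_k(ranked_ids, gain_by_id, k):
    """DCG of the given order divided by the DCG of the ideal order."""
    gains = [gain_by_id.get(doc_id, 0.0) for doc_id in ranked_ids]
    ideal_gains = sorted(gain_by_id.values(), reverse=True)
    ideal_dcg = dcg_at_k(ideal_gains, k)
    return dcg_at_k(gains, k) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical relevance grades for one query.
gain_by_id = {"a": 3.0, "b": 2.0, "c": 1.0}
relevant_ids = set(gain_by_id)

retrieved = ["c", "x", "a", "b"]   # order from the initial retrieval
reranked = ["a", "b", "c", "x"]    # same candidates after reranking

print(recall_at_k(retrieved, relevant_ids, 4), ndcg_at_k(retrieved, gain_by_id, 4))
print(recall_at_k(reranked, relevant_ids, 4), ndcg_at_k(reranked, gain_by_id, 4))
```

Reranking a fixed candidate set cannot change its recall, only its order. That is why recall is the natural target for the retrieval step and NDCG for the reranking step.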

You can also optimize your feature set per service. You likely have some features that are relevant in one service but not the other. For example, the price of a product probably doesn’t affect whether it should be returned or not, but it could affect its ranking. By understanding and testing which features belong in which service, your search engine should be even more effective.

Related Posts

Developing a Modern Search Stack: An Overview

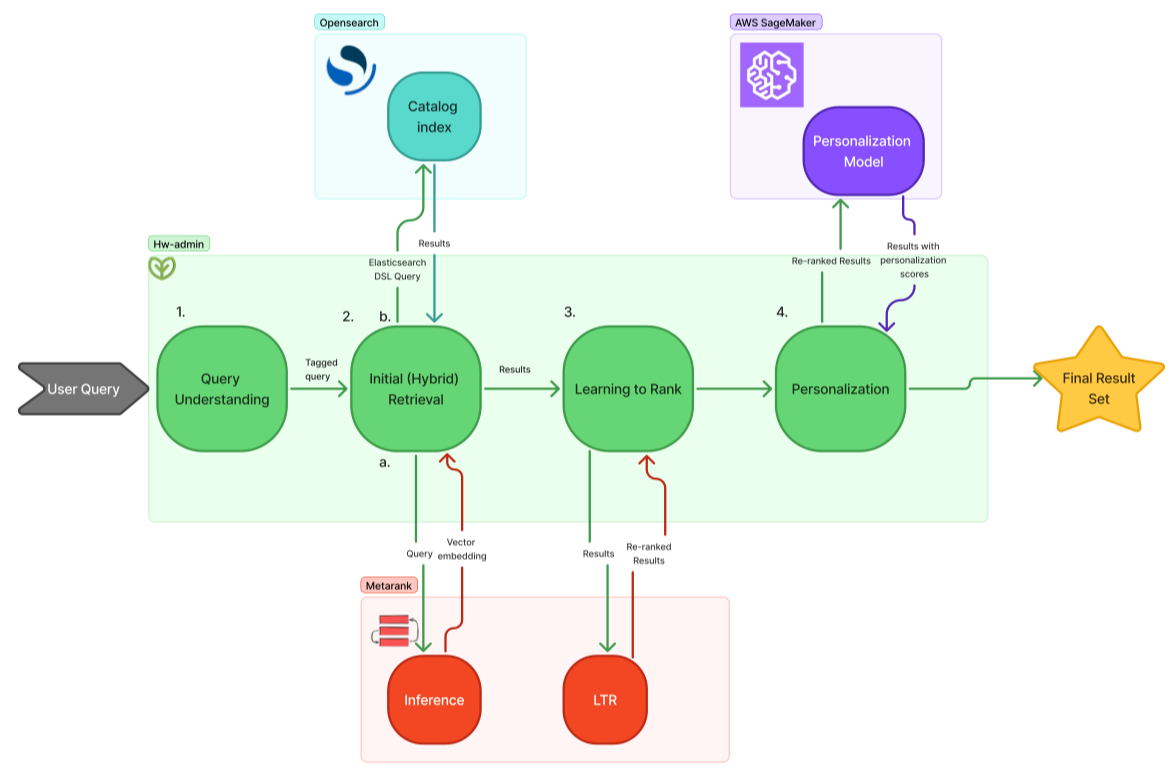

An overview of how Fullscript built a modern search stack to provide more relevant results to its users.

Developing a Modern Search Stack: Optimizing Elasticsearch’s Boost Values

Using Quepid and Optuna to optimize the boost values for your Elasticsearch query and improve your NDCG