Developing a Modern Search Stack: Search Metrics

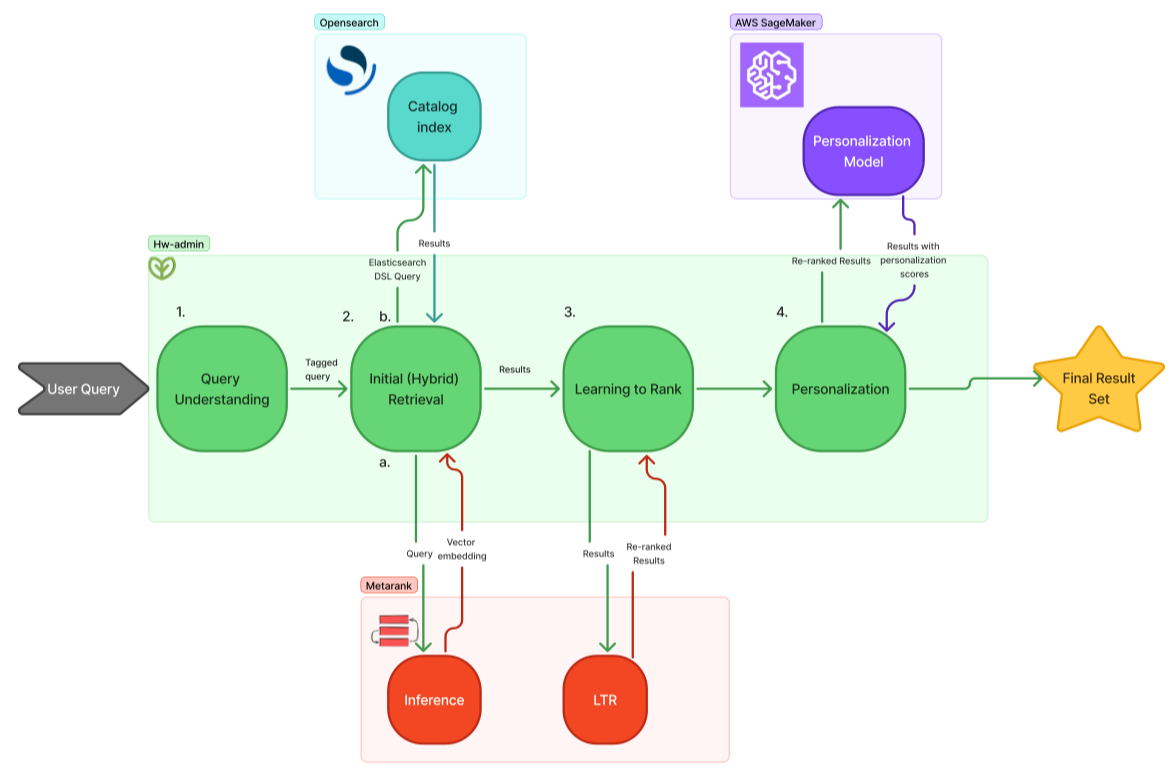

This article is part of a series from Fullscript about how we built our modern search stack. You can find the other articles here.

Before we started modernizing our search stack, we needed a clear way to measure any improvement. Search metrics are how we turn subjective “this looks right” judgments into objective numbers that we can track, compare, and optimize.

When a user searches for something like “protein powder” or “stress support,” we want the most relevant products to appear first. To know whether our system is doing that, we rely on a small set of key metrics: precision, recall, and NDCG. Each metric captures a different aspect of search quality.

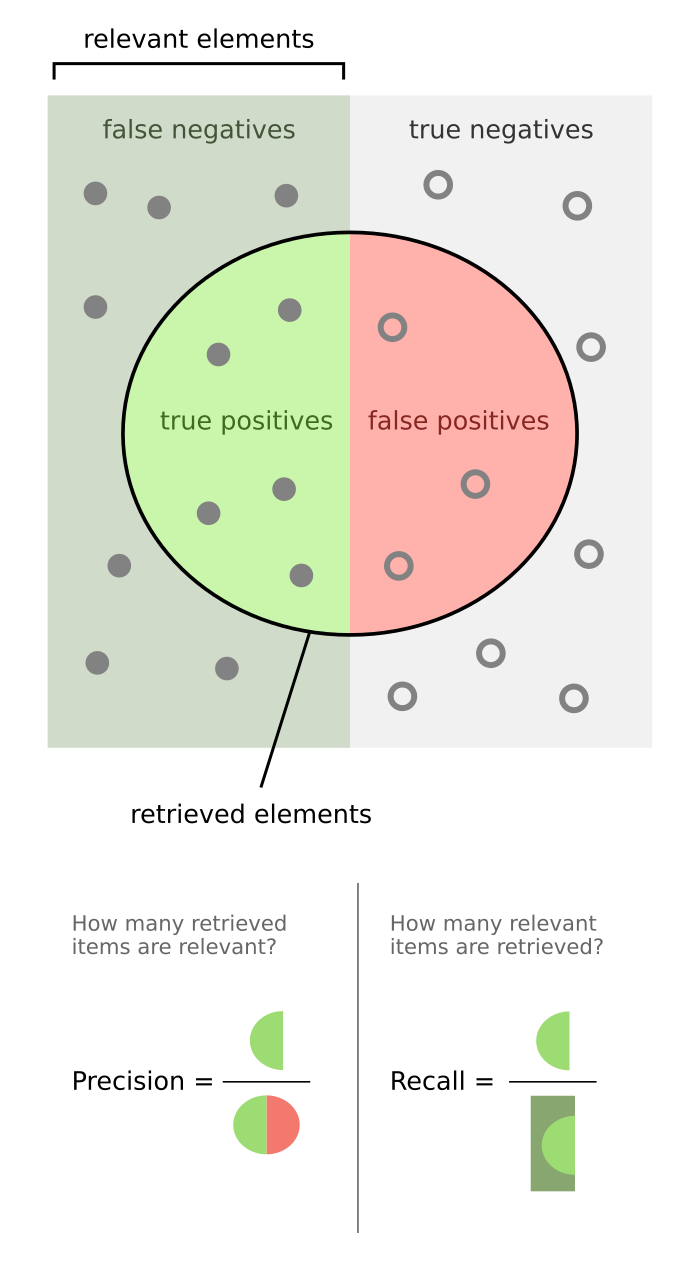

Precision and Recall

Precision and recall are the foundation of search evaluation.

- Precision measures the fraction of search results that are relevant.

- Recall measures the fraction of all relevant products in our index that the search actually returns.

Formally:

- Precision = (relevant results retrieved) / (total results retrieved)

- Recall = (relevant results retrieved) / (total relevant results available)

You can think of it like this:

- If a practitioner searches for “vitamin D3” and the top 10 results include 8 vitamin D3 products and 2 irrelevant ones, precision is 0.8.

- If our index contains 12 vitamin D3 products, and we only retrieved 8, recall is 0.67.
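The two formulas translate directly into code. The sketch below reproduces the vitamin D3 example; the product IDs (`d3-0`, `fish-oil`, and so on) are made up for illustration and are not Fullscript data.

```python
def precision(retrieved: list[str], relevant: set[str]) -> float:
    """Fraction of the retrieved results that are relevant."""
    if not retrieved:
        return 0.0
    hits = sum(1 for doc in retrieved if doc in relevant)
    return hits / len(retrieved)


def recall(retrieved: list[str], relevant: set[str]) -> float:
    """Fraction of all relevant results in the index that were retrieved."""
    if not relevant:
        return 0.0
    return len(set(retrieved) & relevant) / len(relevant)


# Hypothetical index: 12 relevant vitamin D3 products (d3-0 .. d3-11).
relevant = {f"d3-{i}" for i in range(12)}
# The top 10 results contain 8 of them plus 2 irrelevant products.
top_10 = [f"d3-{i}" for i in range(8)] + ["fish-oil", "magnesium"]

print(f"precision = {precision(top_10, relevant):.2f}")  # precision = 0.80
print(f"recall    = {recall(top_10, relevant):.2f}")     # recall    = 0.67
```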

Both numbers are important, but they represent different goals.

- High precision means users see clean, accurate results.

- High recall means users do not miss something important that should have shown up.

In the first stage of retrieval, recall is usually the main priority. At this point, the system only needs to gather all the potentially relevant results, not perfectly order them. If recall is too low at this stage, even the best reranking model cannot recover results that were never retrieved, because a reranker can only reorder the candidates it is given.
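To make that upper bound concrete, here is a small sketch with a hypothetical "perfect" reranker that already knows the ground truth and puts every relevant candidate first. The candidate list and product IDs are invented for illustration. Because it can only reorder what first-stage retrieval returned, recall at any cutoff never exceeds the recall of the candidate set.

```python
def recall(retrieved: list[str], relevant: set[str]) -> float:
    """Fraction of all relevant results that appear in `retrieved`."""
    if not relevant:
        return 0.0
    return len(set(retrieved) & relevant) / len(relevant)


def perfect_rerank(candidates: list[str], relevant: set[str]) -> list[str]:
    """Idealized reranker: relevant candidates first, original order otherwise.

    Python's sort is stable and False < True, so relevant items move to the
    front without changing their relative order. No new items are added.
    """
    return sorted(candidates, key=lambda doc: doc not in relevant)


relevant = {"d3-a", "d3-b", "d3-c", "d3-d"}
# First-stage retrieval missed d3-c and d3-d entirely.
candidates = ["multivitamin", "d3-b", "calcium", "d3-a", "k2"]

reranked = perfect_rerank(candidates, relevant)
print(reranked[:2])                  # ['d3-b', 'd3-a']
print(recall(candidates, relevant))  # 0.5

# Even with perfect ordering, recall at every cutoff is capped at 0.5.
best = max(recall(reranked[:k], relevant) for k in range(1, len(reranked) + 1))
print(best)                          # 0.5
```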

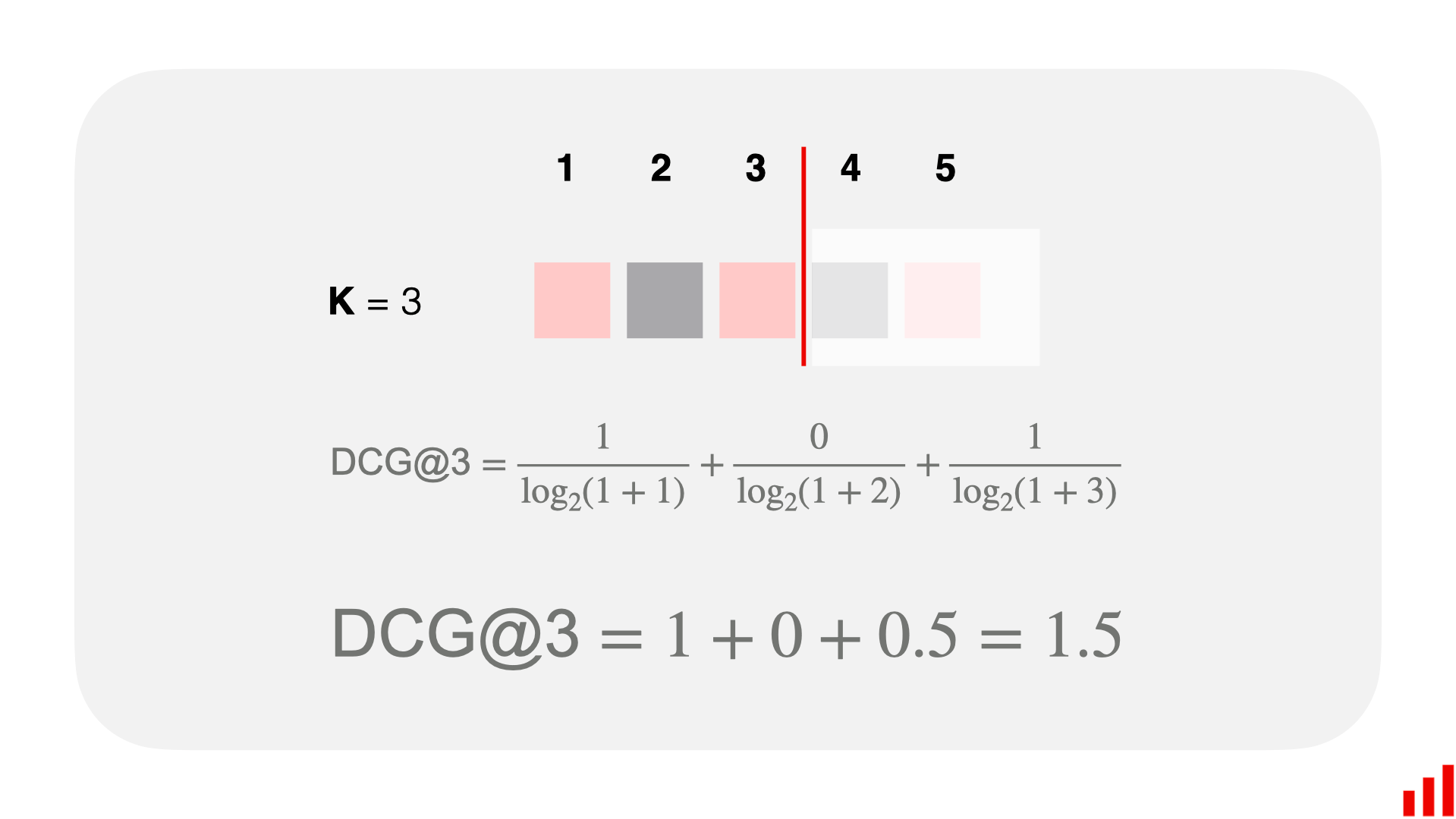

Normalized Discounted Cumulative Gain (NDCG)

Once we have a good candidate set, the next question is: are the best results near the top? That is where NDCG comes in.

NDCG (Normalized Discounted Cumulative Gain) measures ranking quality by looking at both relevance and position. Highly relevant items near the top contribute more to the score, and less relevant items further down contribute less.
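For reference, one common way to write the metric is shown below, where rel_i is the graded relevance of the result at position i and k is the cutoff. The article does not say which gain function Fullscript uses; this version uses linear gains, and many implementations instead use 2^{rel_i} - 1 to reward highly relevant items more strongly.

```latex
\mathrm{DCG@}k = \sum_{i=1}^{k} \frac{\mathrm{rel}_i}{\log_2(i + 1)}
\qquad
\mathrm{NDCG@}k = \frac{\mathrm{DCG@}k}{\mathrm{IDCG@}k}
```

Here IDCG@k is the DCG@k of the ideal ordering, with the judged results sorted from most to least relevant, so for non-negative relevance grades NDCG@k falls between 0 and 1.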

For example, if a user searches “omega 3”, they should see actual omega-3 supplements first, not tangential products like multivitamins or unrelated oils. If those highly relevant omega-3 results appear in the first few positions, NDCG will be high. If they are buried halfway down the page, NDCG will drop.

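The “discounted” part means lower-ranked results contribute less to NDCG, since users rarely scroll far. The “normalized” part divides the score by the score of the ideal ordering (the same judged results sorted from most to least relevant), so every query scores between 0 and 1 and scores stay comparable across queries with different numbers of results and different relevance scores.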
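A minimal NDCG@k sketch, applied to the "omega 3" example, is shown below. The relevance grades (3 for an omega-3 supplement, 1 for a tangential multivitamin, 0 for an unrelated oil) are hypothetical, and the gains are linear. For brevity, it builds the ideal ordering from the same returned results; a fuller version would build it from every judged document for the query.

```python
import math


def dcg_at_k(gains: list[float], k: int) -> float:
    """Sum of gains, each discounted by log2(position + 1).

    `i` is 0-based here, so position i + 1 uses log2(i + 2).
    """
    return sum(g / math.log2(i + 2) for i, g in enumerate(gains[:k]))


def ndcg_at_k(gains: list[float], k: int) -> float:
    """DCG of the actual ranking divided by DCG of the ideal ranking."""
    ideal = dcg_at_k(sorted(gains, reverse=True), k)
    return dcg_at_k(gains, k) / ideal if ideal > 0 else 0.0


# Omega-3 supplements first, then a multivitamin, then unrelated oils.
good = [3, 3, 3, 1, 0, 0]
# The same products, with the omega-3 supplements buried at the bottom.
buried = [0, 1, 0, 3, 3, 3]

print(f"{ndcg_at_k(good, 6):.2f}")    # 1.00
print(f"{ndcg_at_k(buried, 6):.2f}")  # 0.61
```

Both rankings contain exactly the same products, so precision and recall are identical. Only the position-aware metric separates them.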

To calculate NDCG, you first need a judgment list. This is a set of search queries where each returned document has been assigned a relevance score. These scores can come from human evaluators, implicit user feedback (such as clicks, add-to-carts, or purchases), or any other labelling process that reflects relevance. Judgment lists serve as ground truth data, allowing metrics like NDCG to be computed objectively. Without these relevance judgments, it would be impossible to measure whether a change to the ranking actually improves quality.

We often evaluate NDCG@24, which scores only the top 24 results, since users on our platform see 24 products per page. This also simplifies the evaluation process, as our raters or labelling systems only need to score what users actually see.
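Putting the judgment list and the page-size cutoff together, an offline evaluation could look roughly like the sketch below. The judgment list, the 0 to 3 grading scale, the search results, and the choice to treat unjudged products as relevance 0 are all illustrative assumptions, not Fullscript's actual data or pipeline. Here the ideal ordering is built from every judged product for the query, so a relevant product that was never returned still lowers the score.

```python
import math
from statistics import mean

# Hypothetical judgment list: query -> {product_id: graded relevance (0-3)}.
JUDGMENTS: dict[str, dict[str, int]] = {
    "omega 3": {"omega3-fish-oil": 3, "omega3-algae": 3, "multivitamin": 1, "coconut-oil": 0},
    "vitamin d3": {"d3-1000iu": 3, "d3-k2": 2, "calcium": 1},
}

PAGE_SIZE = 24  # products shown per results page, so we evaluate NDCG@24


def ndcg_at_k(ranking: list[str], judged: dict[str, int], k: int) -> float:
    """NDCG@k for one query, using linear gains and a log2 discount."""
    # Unjudged products are treated as relevance 0 (an assumption).
    gains = [judged.get(doc, 0) for doc in ranking[:k]]
    dcg = sum(g / math.log2(i + 2) for i, g in enumerate(gains))
    # The ideal ordering uses every judged product for this query.
    ideal_gains = sorted(judged.values(), reverse=True)[:k]
    idcg = sum(g / math.log2(i + 2) for i, g in enumerate(ideal_gains))
    return dcg / idcg if idcg > 0 else 0.0


def mean_ndcg(results: dict[str, list[str]], judgments: dict[str, dict[str, int]], k: int = PAGE_SIZE) -> float:
    """Average NDCG@k over every query in the judgment list."""
    return mean(ndcg_at_k(results.get(q, []), judged, k) for q, judged in judgments.items())


# Hypothetical search results for each query, best first.
results = {
    "omega 3": ["omega3-fish-oil", "omega3-algae", "multivitamin", "coconut-oil"],
    "vitamin d3": ["calcium", "d3-k2", "d3-1000iu"],
}

score = mean_ndcg(results, JUDGMENTS)
print(f"mean NDCG@{PAGE_SIZE} = {score:.3f}")
```

Re-running the same evaluation before and after a ranking change gives a single number to compare, which is the point of keeping the judgment list fixed.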
